- Published on

AI Specialist Course Case Study - Week 1

- Authors

- Name

- Jesús Herman

Introduction

As part of the Artificial Intelligence Specialist course I am taking, we have the opportunity to complete a case study: a chance to put what we have learned into practice and develop new skills. We were invited to propose our own projects, and two very different ones particularly interest me.

Projects I have proposed

- Stock price prediction with ML.

- Face detection and facial recognition.

Chosen Project: Face Detection and Facial Recognition

- I have experimented with TensorFlow.js in the past and found it interesting.

- It's a fun project that I'm going to include my children in.

- Especially Aratz (the 7-year-old), who is very interested in computer interaction. I gave him an RFID card reader, and he spends all day interacting with it and the computer. He will enjoy seeing and participating in a project like this, and perhaps we can even integrate his reader in the future!

- Of everything I've learned so far about artificial intelligence, Computer Vision is probably one of the fields that interests me the most, because of its applications in many areas: health, security, production lines.

- Above all: I think it's something I'm going to enjoy and can continue to develop and improve in the future 😀.

How I am planning the project

- I want to leverage my experience in the Cloud Native environment, and therefore the project will be "containerized". I know there are similar and much more advanced applications than mine, but it is a good opportunity to create a project that can potentially be deployed in a Docker environment or even a k8s cluster.

- It is an opportunity to improve my skills as a project manager/product owner. I am going to define the project requirements properly, document them, and track the development. I don't think I'll go as far as setting up a Kanban board, although maybe I'll get excited and end up doing it 😂.

Getting started: researching the implementation and creating a GitHub repository

For the research, I mainly used online sources found through Google and YouTube. There are countless approaches and many different ways of using the libraries. I also have the course book. I decided to document the requirements and implementation up front, based on what I have seen in these sources. It is likely that I will have to revise this document in the future. The application has two well-defined parts:

- A learning and face detection model

- A web server that shows the video and data on the client while updating and interacting with the Google Sheets API.

The implementation of the web server and the interaction with the Google Sheets API is something I know from past experiments, and I think it is the part I know best.

Once I finished my research, I created a GitHub repository with the most complete README file possible. I have always been a staunch defender of the Lean approach: build something quickly and iterate. But from my past experience in software projects, I know it doesn't hurt to have documentation that is as detailed as possible. The code is in the following repository: https://github.com/jhmarina/app-reconocimiento-facial.

Project Definition

This is a web application that performs real-time face detection and recognition. The application detects faces using a pre-trained model and displays the name of the detected person along with the time of detection. This data will be shown on a web page in real time and will also be recorded in a Google Sheets spreadsheet.

That would be the project's goal. I think it's ambitious, but we have 60 hours to do it. I believe it's feasible.

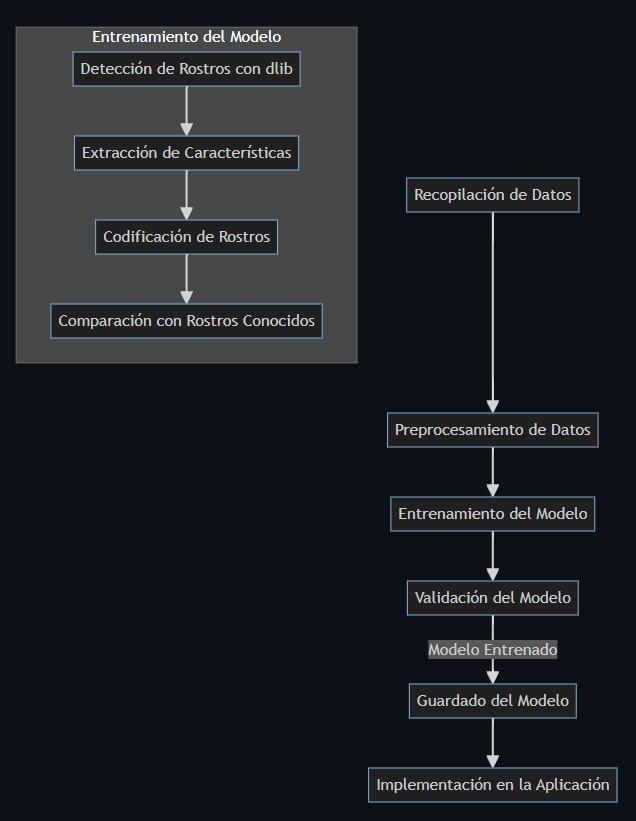

How will the learning model work?

- Data Collection: Training images of different people are collected with their respective labels (names).

- Data Preprocessing: The images are preprocessed, for example, by adjusting their size and format.

- Model Training:

  - Face Detection with dlib: dlib is used to detect faces in the images.

  - Feature Extraction: Important facial features are extracted from each face.

  - Face Encoding: The facial features are encoded into a numerical feature vector.

  - Comparison with Known Faces: Face encodings are compared with known face encodings to train the recognition model.

- Model Validation: The model's accuracy is validated with a validation dataset.

- Model Saving: The trained model (facial encodings and labels) is saved to a file for later use.

- Implementation in the Application: The saved model is implemented in the web application to perform real-time face detection and recognition.

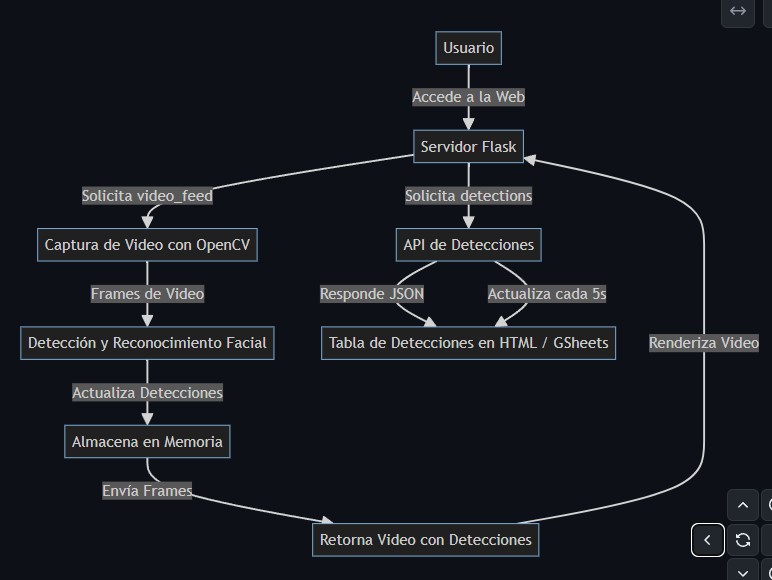
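The encoding, saving, and comparison steps in the list above could be sketched roughly as follows. This is only a minimal sketch under my own assumptions, not the final implementation: it assumes the `face_recognition` library (which builds on dlib), a hypothetical folder layout with one subfolder of `.jpg` images per person, and a pickle file for storage. The function names are placeholders I made up for illustration, and the `0.6` tolerance simply mirrors the default used by `face_recognition.compare_faces`.

```python
import math
import pickle
from pathlib import Path


def encode_known_faces(image_dir):
    """Return (encodings, names) for images stored as image_dir/<name>/<file>.jpg.

    The folder layout is an assumption made for this sketch.
    """
    # Imported lazily: face_recognition depends on dlib, which is heavy to install.
    import face_recognition

    encodings, names = [], []
    for image_path in sorted(Path(image_dir).glob("*/*.jpg")):
        image = face_recognition.load_image_file(str(image_path))
        # face_encodings returns one 128-dimensional vector per face it finds.
        for encoding in face_recognition.face_encodings(image):
            encodings.append(encoding.tolist())
            names.append(image_path.parent.name)
    return encodings, names


def save_model(path, encodings, names):
    """Persist the "model": the known encodings and their labels."""
    with open(path, "wb") as f:
        pickle.dump({"encodings": encodings, "names": names}, f)


def load_model(path):
    """Load the encodings and labels written by save_model."""
    with open(path, "rb") as f:
        model = pickle.load(f)
    return model["encodings"], model["names"]


def best_match(encoding, known_encodings, known_names, tolerance=0.6):
    """Return the name of the closest known face, or "Unknown" if none is close enough."""
    best_name, best_distance = "Unknown", tolerance
    for known, name in zip(known_encodings, known_names):
        # Euclidean distance between the two encoding vectors.
        distance = math.dist(encoding, known)
        if distance <= best_distance:
            best_name, best_distance = name, distance
    return best_name
```

In this sketch the "trained model" is nothing more than the stored encodings plus their labels, and recognition is a nearest-neighbour lookup over them. Whether that is accurate enough is exactly what the validation step would have to show.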

How will the web application work?

- User: The user accesses the web application from their browser.

- Flask Server: The Flask server receives the web access request.

- Video Capture with OpenCV: The Flask server requests the video feed, and OpenCV captures video frames in real time.

- Face Detection and Recognition: Video frames are processed to detect and recognize faces using the face_recognition library.

- Update Detections: Detections (name and time) are stored in an in-memory list.

- Send Data to Google Sheets: Detection data is sent to Google Sheets using the Google Sheets API.

- Google Sheets Spreadsheet: Data is saved to a Google Sheets spreadsheet.

- Return Video with Detections: Processed frames are sent back to the Flask server, which returns them to the user's browser.

- Render Video: The user's browser renders the video with the superimposed detections.

- Detections API: When the browser requests the detections, the Flask server responds with a JSON document containing the stored detections.

- Detections Table in HTML: The user's browser updates the detections table in the web interface every 5 seconds with the information received from the detections API.
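To make the flow above more concrete, here is a minimal sketch of the server side, under several assumptions of mine: the route names `/video_feed` and `/detections`, the `DetectionLog` class, and the helper functions are all hypothetical, and the video is streamed as MJPEG (`multipart/x-mixed-replace`), which is one common way to do this with Flask and OpenCV but not necessarily what I will end up using. Face recognition and the Google Sheets update are left out and only marked with a comment.

```python
import threading
from datetime import datetime


class DetectionLog:
    """Thread-safe in-memory list of detections (name and time)."""

    def __init__(self, max_items=100):
        self._items = []
        self._lock = threading.Lock()
        self._max_items = max_items

    def add(self, name, when=None):
        when = when or datetime.now()
        entry = {"name": name, "time": when.strftime("%Y-%m-%d %H:%M:%S")}
        with self._lock:
            self._items.append(entry)
            # Drop the oldest entries so the list never grows without bound.
            del self._items[:-self._max_items]
        return entry

    def as_list(self):
        with self._lock:
            return list(self._items)


def mjpeg_chunk(jpeg_bytes):
    """Wrap one JPEG image as a part of a multipart/x-mixed-replace stream."""
    return b"--frame\r\nContent-Type: image/jpeg\r\n\r\n" + jpeg_bytes + b"\r\n"


def frames(camera_index=0):
    """Yield MJPEG chunks from the camera until it stops delivering frames."""
    import cv2  # lazy import: only needed when a camera is actually used

    capture = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            # Face recognition, drawing names on `frame`, and logging
            # detections would happen here.
            ok, jpeg = cv2.imencode(".jpg", frame)
            if ok:
                yield mjpeg_chunk(jpeg.tobytes())
    finally:
        capture.release()


def create_app(log):
    """Build the Flask app; `log` is the DetectionLog shared with the video loop."""
    from flask import Flask, Response, jsonify  # lazy import, as with cv2

    app = Flask(__name__)

    @app.route("/video_feed")
    def video_feed():
        return Response(frames(), mimetype="multipart/x-mixed-replace; boundary=frame")

    @app.route("/detections")
    def detections():
        return jsonify(log.as_list())

    return app
```

With something like this, the page could show the stream in an `<img>` tag pointing at `/video_feed` and poll `/detections` every 5 seconds to refresh the table. The lock matters because the video loop and the API requests may run in different threads.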

End of the First Day Working on the Case Study

With this, I finish the first day of work on the case study. Next time I will start implementing these ideas in code. I am convinced that part of what I have documented will change; I am proposing concepts based on what I have researched, and I still don't know exactly how I will implement them.